Imagine a gardener tending to a row of plants—each representing a weak model that struggles to grow on its own. The gardener, patient and observant, learns from every wilted leaf and dry stem, improving the soil and water balance with every attempt. That’s what Gradient Boosting Machines (GBM) do in data science: they learn iteratively from their own mistakes, growing stronger with each cycle until the final model becomes a robust forest of accurate predictions.

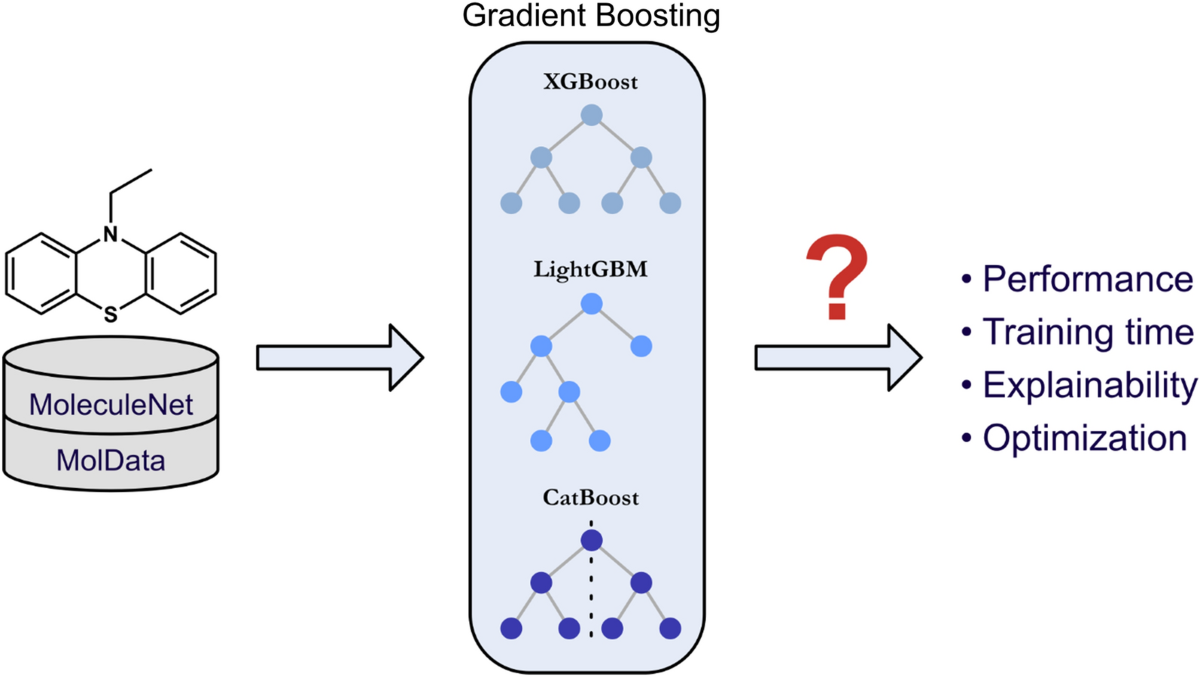

GBMs have revolutionised industries like finance and marketing by blending simplicity and sophistication. Through models like XGBoost, LightGBM, and CatBoost, analysts can uncover hidden patterns, manage complex datasets, and forecast trends with remarkable precision.

Understanding the Essence of Gradient Boosting

At its heart, gradient boosting works by building models sequentially. Each new model attempts to correct the errors made by the previous one, leading to continuous improvement. It’s much like a team where every member refines the previous person’s work, ensuring the collective output becomes sharper and more accurate.
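The sequential idea can be sketched in a few lines of plain Python. This is a minimal illustration, not how any production library is implemented: it assumes a single numeric feature, squared-error loss (so each new model is fitted to the residuals of the current predictions), and one-split "stumps" as the weak models. All function names and the toy data are hypothetical.

```python
from statistics import mean


def fit_stump(xs, residuals):
    """Return (threshold, left_value, right_value) minimising squared error.

    Assumes xs contains at least two distinct values.
    """
    best = None
    for threshold in sorted(set(xs))[:-1]:  # splits that leave both sides non-empty
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_value, right_value = mean(left), mean(right)
        error = (sum((r - left_value) ** 2 for r in left)
                 + sum((r - right_value) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_value, right_value)
    return best[1:]


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def fit_boosted(xs, ys, n_rounds, learning_rate=0.1):
    """Start from the mean, then repeatedly fit a stump to the current residuals."""
    base = mean(ys)
    predictions = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, predictions)]  # current mistakes
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Take a small step towards correcting those mistakes.
        predictions = [p + learning_rate * predict_stump(stump, x)
                       for p, x in zip(predictions, xs)]
    return {"base": base, "learning_rate": learning_rate, "stumps": stumps}


def predict(model, x):
    correction = sum(predict_stump(s, x) for s in model["stumps"])
    return model["base"] + model["learning_rate"] * correction


def mean_squared_error(model, xs, ys):
    return mean([(y - predict(model, x)) ** 2 for x, y in zip(xs, ys)])


# Toy data (made up for illustration): a rough three-level step.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 3.1, 3.0, 5.2, 5.1, 4.9]

baseline = fit_boosted(xs, ys, n_rounds=0)   # predicts the mean only
boosted = fit_boosted(xs, ys, n_rounds=50)   # mean plus 50 small corrections
```

Comparing `mean_squared_error(baseline, xs, ys)` with `mean_squared_error(boosted, xs, ys)` shows the training error falling as rounds are added. The small learning rate is a deliberate choice: each stump corrects only part of the remaining error, which is what keeps the process gradual.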

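The true strength of GBM lies in its adaptability. Tree-based boosting captures non-linear relationships and copes with wide feature spaces, and the main libraries (XGBoost, LightGBM, and CatBoost) can handle missing values natively. That combination makes the approach valuable for financial forecasting and customer analytics.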

Professionals pursuing a business analyst course in Hyderabad often encounter GBM early in their curriculum. The reason is simple: it’s a cornerstone of modern analytics that bridges statistical thinking with machine learning automation.

XGBoost: The Workhorse of Competitive Modelling

XGBoost (Extreme Gradient Boosting) is known for its speed and performance. It adds regularisation terms to the training objective, penalising large leaf weights and extra leaves, which limits the model’s complexity and reduces (rather than eliminates) overfitting. In financial applications, this translates into risk predictions that are less likely to chase random fluctuations in the training data.
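To make the effect of regularisation concrete, the sketch below evaluates the leaf-weight and split-gain formulas from the XGBoost objective in plain Python. It covers only the L2 penalty (`reg_lambda`) and the per-split penalty (`gamma`); the function names and the numbers are illustrative assumptions, not calls into the XGBoost library.

```python
def leaf_weight(grad_sum, hess_sum, reg_lambda):
    """Optimal leaf value: -G / (H + lambda). A larger lambda shrinks it towards 0."""
    return -grad_sum / (hess_sum + reg_lambda)


def split_gain(g_left, h_left, g_right, h_right, reg_lambda, gamma):
    """Improvement in the regularised objective from splitting one leaf into two.

    A split is only worth making when the returned gain is positive.
    """
    def score(g, h):
        return g * g / (h + reg_lambda)

    parent = score(g_left + g_right, h_left + h_right)
    return 0.5 * (score(g_left, h_left) + score(g_right, h_right) - parent) - gamma


# Hypothetical gradient and hessian sums for the rows on each side of a split.
unregularised = split_gain(-4.0, 2.0, 4.0, 2.0, reg_lambda=0.0, gamma=0.0)
with_l2 = split_gain(-4.0, 2.0, 4.0, 2.0, reg_lambda=2.0, gamma=0.0)
with_l2_and_gamma = split_gain(-4.0, 2.0, 4.0, 2.0, reg_lambda=2.0, gamma=5.0)
```

With these made-up sums, the L2 penalty lowers the gain and the `gamma` penalty pushes it below zero, so the split would be rejected. That is the mechanism by which regularisation keeps trees from growing branches that explain only noise.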

Marketing teams also leverage XGBoost to predict customer churn, optimise pricing, or even rank leads based on likelihood to convert. Its parallel processing and scalability make it ideal for enterprise-level operations where speed is as crucial as accuracy.

Much like a seasoned chess player, XGBoost balances aggression (learning quickly) with caution (avoiding overfitting), improving its position one greedy move at a time rather than planning the whole game in advance.

LightGBM: Speeding Through Big Data

As datasets in finance and marketing continue to grow, traditional models struggle with efficiency. LightGBM enters as a solution optimised for speed and scalability. Using histogram-based algorithms, it groups continuous values into discrete bins, so the search for a split scans a small number of bins instead of every distinct value.
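The binning idea can be illustrated with a short, self-contained sketch. It is a simplification under stated assumptions: equal-width bins (LightGBM chooses its bin boundaries differently), squared-error gradients, and hypothetical function names and data.

```python
from bisect import bisect_left


def make_bin_edges(values, n_bins):
    """Equal-width interior edges; an illustrative simplification."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / n_bins
    return [lo + width * i for i in range(1, n_bins)]


def build_histogram(bins, gradients, n_bins):
    """Accumulate the gradient sum and row count for each bin in one pass."""
    grad_sums = [0.0] * n_bins
    counts = [0] * n_bins
    for b, g in zip(bins, gradients):
        grad_sums[b] += g
        counts[b] += 1
    return grad_sums, counts


def best_bin_split(grad_sums, counts):
    """Scan bin boundaries (not raw values) for the best split.

    Returns the index of the last bin that goes to the left child.
    """
    total_g, total_n = sum(grad_sums), sum(counts)
    best_bin, best_score = None, float("-inf")
    g_left, n_left = 0.0, 0
    for b in range(len(counts) - 1):
        g_left += grad_sums[b]
        n_left += counts[b]
        n_right = total_n - n_left
        if n_left == 0 or n_right == 0:
            continue
        g_right = total_g - g_left
        score = g_left ** 2 / n_left + g_right ** 2 / n_right
        if score > best_score:
            best_bin, best_score = b, score
    return best_bin


# Made-up feature values and gradients for illustration.
values = [0.1, 0.4, 0.5, 0.9, 5.2, 5.5, 5.9, 6.0]
gradients = [-1.0, -1.0, -1.0, -1.0, 1.0, 1.0, 1.0, 1.0]

edges = make_bin_edges(values, n_bins=4)
bins = [bisect_left(edges, v) for v in values]  # bin index for each row
grad_sums, counts = build_histogram(bins, gradients, n_bins=4)
split_after_bin = best_bin_split(grad_sums, counts)
```

The cost of the split search now depends on the number of bins rather than the number of distinct values, which is where the speed-up on large datasets comes from.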

This makes LightGBM particularly suitable for latency-sensitive trading models, fraud detection, and large-scale customer segmentation. On large datasets, training is often considerably faster than with an exact split search, making it a favourite where models must be refreshed frequently.

Courses like a business analyst course in Hyderabad teach learners how LightGBM transforms traditional analytics pipelines—helping businesses adapt to data-intensive demands without sacrificing precision.

CatBoost: Taming Categorical Features

CatBoost stands out for its ability to handle categorical variables seamlessly. In marketing, where data such as product types, regions, and customer segments dominate, CatBoost eliminates the need for extensive preprocessing.

Its encoding methods derive category statistics from the data itself, computing them in an order that keeps the target of each row out of that row’s own encoding, so less depends on hand-crafted preprocessing choices. This becomes particularly valuable in credit scoring and personalised recommendation systems, where small differences can impact millions of decisions.
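One encoding idea associated with CatBoost is ordered target statistics: each row's category is replaced by a smoothed average of the targets seen in earlier rows with the same category. The sketch below is a simplified illustration that treats the given row order as the ordering (CatBoost works with random permutations); the function name, the `prior` value, and the data are assumptions made for the example.

```python
def ordered_target_stats(categories, targets, prior, prior_weight=1.0):
    """Encode each category using only the targets of earlier rows.

    Because a row never sees its own target, the encoding does not leak the
    label it is later used to predict.
    """
    sums, counts = {}, {}
    encoded = []
    for category, target in zip(categories, targets):
        seen_sum = sums.get(category, 0.0)
        seen_count = counts.get(category, 0)
        # Smoothed mean: falls back to the prior when the category is new.
        encoded.append((seen_sum + prior_weight * prior) / (seen_count + prior_weight))
        # Only now add this row's target to the running statistics.
        sums[category] = seen_sum + target
        counts[category] = seen_count + 1
    return encoded


# Hypothetical marketing data: customer region and whether they converted.
regions = ["north", "south", "north", "north", "south"]
converted = [1, 0, 1, 0, 1]

encoded_regions = ordered_target_stats(regions, converted, prior=0.5)
```

The first occurrence of each region receives the prior, and later occurrences move towards that region's observed conversion rate as evidence accumulates.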

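CatBoost is also designed to resist overfitting. Its ordered boosting scheme reduces the target leakage that can inflate training performance, and its built-in feature importance and SHAP-value outputs help explain individual predictions, making it a reliable choice when models must justify their decisions to regulators or stakeholders.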

GBM in Action: Finance Meets Marketing

In finance, GBM models are used for credit risk scoring, fraud detection, and algorithmic trading. They analyse thousands of features—from transaction patterns to macroeconomic indicators—to predict outcomes with high accuracy.

In marketing, these models power campaign optimisation, sentiment analysis, and customer segmentation. When retrained regularly on fresh campaign feedback, they fine-tune audience targeting and improve ROI.

What unites both sectors is the shared reliance on data-driven adaptability—the ability to learn from mistakes and evolve with every iteration.

Conclusion

Gradient Boosting Machines exemplify how iterative learning and smart algorithms can mimic human intuition. Tools like XGBoost, LightGBM, and CatBoost are not just statistical engines; they are adaptive systems capable of navigating uncertainty, complexity, and scale.

For aspiring analysts, mastering these models means gaining a competitive advantage in two of the most data-rich industries: finance and marketing. By embracing this approach, they step into a future where predictions are not fixed after a single pass but refined with every iteration.